[Continuing coverage of the UN’s 2015 conference on killer robots. See all posts in this series here.]

Before I start blogging the kickoff of this week’s United Nations meeting on killer robots, a little background is called for, both about the issue and my views on it.

I have worked on this issue in different capacities for many years now. (In fact, I proposed a ban on autonomous weapons as early as 1988, and again in 2002 and 2004.) In the present context, the first thing I want to address is the Obama administration’s 2012 policy directive on Autonomy in Weapon Systems. It was not so much a decision made by the military as a decision made for the military, after long internal resistance and at least a decade of debate within the U.S. Department of Defense. You may have heard that the directive imposed a moratorium on killer robots. It did not. Rather, as I explained in 2013 in the Bulletin of the Atomic Scientists, it “establishes a framework for managing legal, ethical, and technical concerns, and signals to developers and vendors that the Pentagon is serious about autonomous weapons.” As a Defense Department spokesman told me directly, the directive “is not a moratorium on anything.” It’s a full-speed-ahead policy.

|

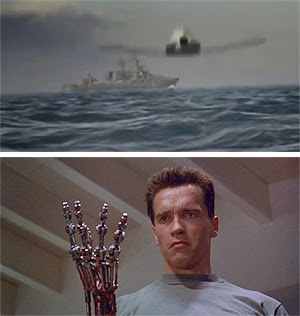

| What counts as “semi-autonomous”? Top: Artist’s conception of Lockheed Martin’s planned Long Range Anti-Ship Missile in flight. Bottom: The Obama administration would define the original T-800 Terminator as merely “semi-autonomous.” |

The story of how so many people misinterpreted or were misled by the directive is complicated, and I won’t get into the details right now. Basically, though, the policy was rather cleverly constructed by strong proponents of autonomous weapons to deflect concerns about actual emerging (and some existing) weaponry by suggesting that the real issue is futuristic machines that independently “select and engage” targets of their own choosing. Such machines are supposedly placed under close scrutiny by the policy, but not really. The directive defines a separate category of “semi-autonomous” weapons that in reality includes everything that is happening today or is likely to happen in the near future as we head down the road toward Terminator territory. A prime example is Lockheed Martin’s Long Range Anti-Ship Missile, a program now entering “accelerated acquisition” with initial deployment slated for 2018. This wonder-weapon can autonomously steer itself around emergent threats, scan a wide area searching for an enemy fleet, identify target ships among civilian vessels and others in the vicinity, and plan its attack in collaboration with sister missiles in a salvo. It’s classified as “semi-autonomous,” which under the policy means it’s given a green light and does not require senior review. In fact, as I’ve argued, under the bizarre definition in the administration’s policy, the Terminator himself (excuse me, itself) could qualify as a merely “semi-autonomous” weapon system.

If it sounds like I’m casting the United States as the villain here, let me be clear: the rest of the world is in the game, and they’re right behind us, but we happen to be the leader, in both technology and policy. For every type of drone that the United States has in use or development, China has produced a similar model. (Here I can be accused of conflating issues: today’s drones are not autonomous, although some call them semi-autonomous, but the close relationship between drone and autonomous weapons technologies is undeniable.) And when the U.S. Navy opened its Laboratory for Autonomous Systems Research in 2012, Russia responded by establishing its own military robotics lab the following year. Some have characterized Russia as “taking the lead,” but the reality is better captured by the statement of a Russian academician that “From the point of view of theory, engineering and design ideas, we are not in the last place in the world.”

The Big Dog that has Russia’s military leadership barking.

At the 2014 LAWS meeting, Russian and Chinese statements were as bland and obtuse as their American counterparts, but it’s clear that, like the rest of the world, those countries are watching closely what we do, and showing that they are not ready to accept “last place.” Russian deputy prime minister Dmitry Rogozin, head of military industries, penned an article in Rossiya Gazeta in 2014 that amounts to perhaps the closest thing to an official Russian policy response to the publicly released U.S. directive. It is a clarion call to Russian industry, mired as it is in post-Soviet mediocrity, to step up to the challenge posed by American achievements like “Big Dog” and to develop “robotic systems that are fully integrated in the command and control system, capable not only to gather intelligence and to receive from the other components of the combat system, but also on their own strike.” China eschews such straightforwardly belligerent declarations. Interestingly, the Chinese closing statement at last year’s meeting rebuked the American suggestion to focus on the process of legality reviews for new weapons, on the grounds that this would exclude countries that did not yet have autonomous weapons to review, which hints at possible Chinese support for a more activist approach to arms control. But China’s activity in drones, robots, and artificial intelligence speaks for itself; China will not accept last place either.

My question for those setting U.S. policy is this: Given that we are the world’s leader in this technology, but with only a narrow lead at best, why are we not at least trying to lead in a different direction, away from a global robot arms race? Why are we not saying that, of course, we will develop autonomous weapons if necessary, but we would prefer an arms-control approach, based on strong moral principles and the overwhelming sentiment of the world’s people (including strong majorities among U.S. military personnel)? Why not? Why are we not even signaling interest in such an approach? Comments are open, fellas.

In the days to come, I’ll report on both the expert talks and country statements, and whatever else I see going on in Geneva, as well as dig deeper into the underlying issues as they come up. More tomorrow…
