Yesterday, on Jeopardy!, a computer handily beat its human competitors. Stephen Gordon asks, “Did the Singularity Just Happen on Jeopardy?” If so, then I think it’s time for me and my co-bloggers to pack up and go home, because the Singularity is damned underwhelming. This was one giant leap for robot publicity, but only a small step for robotkind.

Unlike Deep Blue, the IBM computer that in 1997 defeated the world chess champion Garry Kasparov, I saw no indication that the Jeopardy! victory constituted any remarkable innovation in artificial intelligence methods. IBM’s Watson computer is essentially search engine technology with some basic natural language processing (NLP) capability sprinkled on top. Most Jeopardy! clues contain definite, specific keywords associated with the correct response — such that you could probably Google those keywords, and the correct response would be contained somewhere in the first page of results. The game is already very amenable to what computers do well.

In fact, Stephen Wolfram shows that you can get a remarkable amount of the way to building a system like Watson just by putting Jeopardy! clues straight into Google:

Once you’ve got that, it only requires a little NLP to extract a list of candidate responses, some statistical training to weight those responses properly, and then a variety of purpose-built tricks to accommodate the various quirks of Jeopardy!-style categories and jokes. Watching Watson perform, it’s not too difficult to imagine the combination of algorithms used.
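To make that combination a little more concrete, here is a minimal Python sketch of the "weight the candidates, then decide whether to ring in" step. It is not a description of how IBM built Watson: the feature names, the weights, the bias, and the 0.5 buzz threshold are all invented for illustration, and in a real system the weights would come from statistical training rather than being typed in by hand.

```python
import math

# Hypothetical evidence weights; a real system would learn these from data.
WEIGHTS = {"keyword_overlap": 2.0, "type_match": 1.5, "source_rank": 1.0}
BIAS = -2.5
BUZZ_THRESHOLD = 0.5  # assumed cutoff: only ring in above this confidence


def confidence(features):
    """Combine evidence scores (each in [0, 1]) into a 0-1 confidence."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))  # logistic squashing


def rank_candidates(candidates):
    """Return (response, confidence) pairs, most confident first."""
    scored = [(resp, confidence(feats)) for resp, feats in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def should_buzz(ranked):
    """Ring in only if the top candidate clears the threshold."""
    return bool(ranked) and ranked[0][1] >= BUZZ_THRESHOLD


# Made-up evidence scores for two placeholder candidate responses.
candidates = {
    "candidate A": {"keyword_overlap": 0.9, "type_match": 1.0, "source_rank": 0.8},
    "candidate B": {"keyword_overlap": 0.6, "type_match": 0.0, "source_rank": 0.7},
}
ranked = rank_candidates(candidates)
print(ranked[0][0], should_buzz(ranked))
```

Nothing in a scheme like this requires the program to represent what the clue means; it only needs evidence scores that correlate with being right.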

Compiling Watson’s Errors

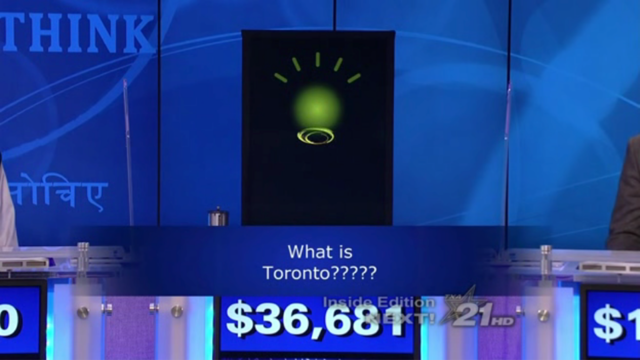

On that large share of search-engine-amenable clues, Watson almost always did very well. What’s more interesting to note is the various types of clues on which Watson performed very poorly. Perhaps the best example was the Final Jeopardy clue from the first game (which was broadcast on the second of three nights). The category was “U.S. Cities,” and the clue was “Its largest airport is named for a World War II hero; its second largest, for a World War II battle.” Both of the human players correctly responded Chicago, but Watson incorrectly responded Toronto — and the audience audibly gasped when it did.

Watson performed poorly on this Final Jeopardy because there were no words in either the clue or the category that are strongly and specifically associated with Chicago — that is, you wouldn’t expect “Chicago” to come up if you were to stick something like this clue into Google (unless you included pages talking about this week’s tournament). But there was an even more glaring error here: anyone who knows enough about Toronto to know about its airports will know that it is not a U.S. city.

There were a variety of other instances like this of “dumb” behavior on Watson’s part. The partial list that follows gives a flavor of the kinds of mistakes the machine made, and can help us understand their causes.

- With the category “Beatles People” and the clue “‘Bang bang’ his ‘silver hammer came down upon her head,’” Watson responded, “What is Maxwell’s silver hammer.” Surprisingly, Alex Trebek accepted this response as correct, even though the category and clue were clearly asking for the name of a person, not a thing.

- With the category “Olympic Oddities” and the clue “It was the anatomical oddity of U.S. gymnast George Eyser, who won a gold medal on the parallel bars in 1904,” Watson responded, “What is leg.” The correct response was, “What is he was missing a leg.”

- In the “Name the Decade” category, Watson at one point didn’t seem to know what the category was asking for. With the clue “Klaus Barbie is sentenced to life in prison & DNA is first used to convict a criminal,” none of its top three responses was a decade. (Correct response: “What is the 1980s?”)

- Also in the category “Name the Decade,” there was the clue, “The first modern crossword puzzle is published & Oreo cookies are introduced.” Ken responded, “What are the twenties.” Trebek said no, and then Watson rang in and responded, “What is 1920s.” (Trebek came back with, “No, Ken said that.”)

- With the category “Literary Character APB,” and the clue “His victims include Charity Burbage, Mad Eye Moody & Severus Snape; he’d be easier to catch if you’d just name him!” Watson didn’t ring in because its top option was Harry Potter, with only 37% confidence. Its second option was Voldemort, with 20% confidence.

- On one clue, Watson’s top option (which was correct) was “Steve Wynn.” Its second-ranked option was “Stephen A. Wynn” — the full name of the same person.

- With the clue “In 2002, Eminem signed this rapper to a 7-figure deal, obviously worth a lot more than his name implies,” Watson’s top option was the correct one — 50 Cent — but its confidence was too low to ring in.

- With the clue “The Schengen Agreement removes any controls at these between most EU neighbors,” Watson’s first choice was “passport” with 33% confidence. Its second choice was “Border” with 14%, which would have been correct. (Incidentally, it’s curious to note that one answer was capitalized and the other was not.)

- In the category “Computer Keys” with the clue “A loose-fitting dress hanging from the shoulders to below the waist,” Watson incorrectly responded “Chemise.” (Ken then incorrectly responded “A,” thinking of an A-line skirt. The correct response was a “shift.”)

- Also in “Computer Keys,” with the clue “Proverbially, it’s ‘where the heart is,’” Watson’s top option (though it did not ring in) was “Home Is Where the Heart Is.”

- With the clue “It was 103 degrees in July 2010 & Con Ed’s command center in this N.Y. borough showed 12,963 megawatts consumed at 1 time,” Watson’s first choice (though it did have enough confidence to ring in) was “New York City.”

- In the category “Nonfiction,” with the clue “The New Yorker’s 1959 review of this said in its brevity & clarity it is ‘unlike most such manuals, a book as well as a tool.’” Watson incorrectly responded “Dorothy Parker.” The correct response was “The Elements of Style.”

- For the clue “One definition of this is entering a private place with the intent of listening secretly to private conversations,” Watson’s first choice was “eavesdropper,” with 79% confidence. Second was “eavesdropping,” with 49% confidence.

- For the clue “In May 2010 5 paintings worth $125 million by Braque, Matisse & 3 others left Paris’ museum of this art period,” Watson responded, “Picasso.”

We can group these errors into a few broad, somewhat overlapping categories:

- Failure to understand what type of thing the clue was pointing to, e.g. “Maxwell’s silver hammer” instead of “Maxwell”; “leg” instead of “he was missing a leg”; “eavesdropper” instead of “eavesdropping.”

- Failure to understand what type of thing the category was pointing to, e.g. “Home Is Where the Heart Is” for “Computer Keys”; “Toronto” for “U.S. Cities.”

- Basic errors in worldly logic, e.g. repeating Ken’s wrong response; considering “Steve Wynn” and “Stephen A. Wynn” to be different responses.

- Inability to understand jokes or puns in clues, e.g. 50 Cent being “worth” “more than his name implies”; “he’d be easier to catch if you’d just name him!” about Voldemort.

- Inability to respond to clues lacking keywords specifically associated with the correct response, e.g. the Voldemort clue; “Dorothy Parker” instead of “The Elements of Style.”

- Inability to correctly respond to complicated clues that involve inference and combining facts in subsequent stages, rather than combining independent associated clues; e.g. the Chicago airport clue.

What these errors add up to is that Watson really cannot process natural language in a very sophisticated way — if it did, it would not suffer from the category errors that marked so many of its wrong responses. Nor does it have much ability to perform the inference required to integrate several discrete pieces of knowledge, as required for understanding puns, jokes, wordplay, and allusions. On clues involving these skills and lacking search-engine-friendly keywords, Watson stumbled. And when it stumbled, it often seemed not just ignorant, but completely thoughtless.

I expect you could create an unbeatable Jeopardy! champion by allowing a human player to look at Watson’s weighted list of possible responses, even without the weights being nearly as accurate as Watson has them. While Watson assigns percentage-based confidence levels, any moderately educated human will immediately be able to sort potential responses into three relatively discrete categories: “makes no sense,” “yes, that sounds right,” and “don’t know, but maybe.” Watson hasn’t come close to touching this.

The Significance of Watson’s Jeopardy! Win

In short, Watson is not anywhere close to possessing true understanding of its knowledge — neither conscious understanding of the sort humans experience, nor unconscious, rule-based syntactic and semantic understanding sufficient to imitate the conscious variety. (Stephen Wolfram’s post accessibly explains his effort to achieve the latter.) Watson does not bring us any closer, in other words, to building a Mr. Data, even if such a thing is possible. Nor does it put us much closer to an Enterprise ship’s computer, as many have suggested.

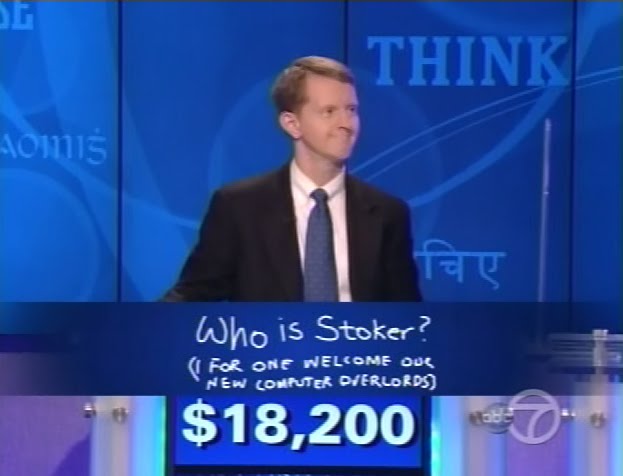

In the meantime, of course, there were some singularly human characteristics on display in the Jeopardy! tournament, and evident only in the human participants. Particularly notable was the affability, charm, and grace of Ken Jennings and Brad Rutter. But the best part was the touches of genuine, often self-deprecating humor by the two contestants as they tried their best against the computer. This culminated in Ken Jennings’s joke on his last Final Jeopardy response:

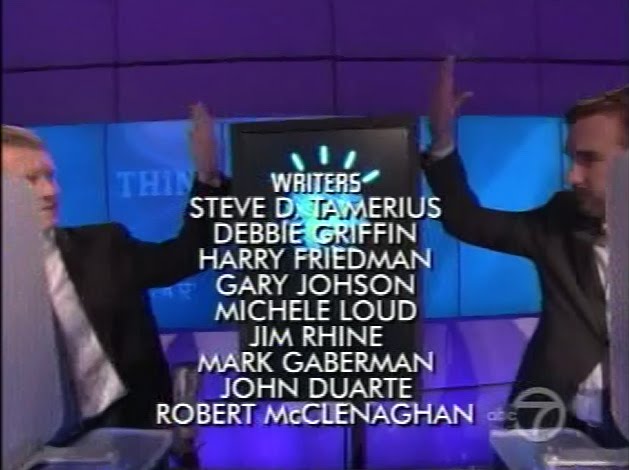

Nicely done, sir. The closing credits, which usually show the contestants chatting with Trebek onstage, instead showed Jennings and Rutter attempting to “high-five” Watson and show it other gestures of goodwill:

I’m not saying it couldn’t ever be done by a computer, but it seems like joking around will have to be just about the last thing A.I. will achieve. There’s a reason Mr. Data couldn’t crack jokes. Because, well, humor — it is a difficult concept. It is not logical. All the more reason, though, why I can’t wait for Saturday Night Live’s inevitable “Celebrity Jeopardy” segment where Watson joins in with Sean Connery to torment Alex Trebek.

Futurisms

February 17, 2011

I think a lot of this article is a bit dumb. Disclaimer: I'm not super familiar with AI stuff and particularly NLP.

ARTICLE: "Unlike Deep Blue, the IBM computer that in 1997 defeated the world chess champion Garry Kasparov, I saw no indication that the Jeopardy! victory constituted any remarkable innovation in artificial intelligence methods."

This statement I have a huge problem with… because I *am* familiar with chess in general and somewhat familiar with computer chess in particular. (Although I wasn't particularly good, I used to play competitively; you can still look me up in the USCF ratings database if you know my full name, and I've got a few dozen games. I've also considered writing my own chess AI, and investigated what it would take.) And even after reading the Wikipedia article on Deep Blue to review, the only thing I can come up with that may have been new is using a learning algorithm to improve the board evaluation function. (There were some other unique things about it like chess-specific hardware, but that's not really AI.) So I have no idea what "remarkable innovation" Deep Blue presented that you're talking about.

The way I look at Watson is this: if you gave me the computer from the USS Enterprise, wiped it of its software, then showed me how to program it and let me get the hang of it, then gave me a day, I'm virtually positive I could write a chess program that would beat Deep Blue. Heck, I might be able to do that on, say, one of IBM's Blue Gene/Ls, though not within a day. But if you gave me the Enterprise's computer and told me to write something that will beat Watson at Jeopardy, my response would be "okay, let me read NLP papers for a month" because I'd have little clue how to do it otherwise.

This isn't necessarily a good indication that Watson progressed from the state of the art… but to suggest that Deep Blue beats Watson in innovation seems dubious at best.

ARTICLE: "In fact, Stephen Wolfram shows that you can get a remarkable amount of the way to building a system like Watson just by putting Jeopardy! clues straight into Google"

Wolfram's statistics are not very relevant here: there's an enormous gap between showing you a page with the answer somewhere on it and actually producing a definitive answer. And even if you count Watson's harder task vs Google's easier task, Watson still cuts out about 1/3 of the missed answers. And tells you when it doesn't know, most of the time.

ARTICLE: "Watson performed poorly on this Final Jeopardy because there were no words in either the clue or the category that are strongly and specifically associated with Chicago — that is, you wouldn’t expect “Chicago” to come up if you were to stick something like this clue into Google (unless you included pages talking about this week’s tournament). But there was an even more glaring error here: anyone who knows enough about Toronto to know about its airports will know that it is not a U.S. city."

This was partially explained by one of the IBM folks. The explanation is that if the category is "U.S. Cities", that does NOT mean that the answer (question) is actually a US City, just that the clue is about a US City in some manner. (The example given was that a clue like "This river is west of New York City" would have fit fine in that category (though too easy the way I presented it).) And so while Watson does pay attention to the category (throughout the game), it's not used as a "type" of the answer.

If the "US" fact is moved into the clue: "This US city's largest airport…", they claim Watson gets it right.

ARTICLE: "Also in the category “Name the Decade,” there was the clue, “The first modern crossword puzzle is published & Oreo cookies are introduced.” Ken responded, “What are the twenties.” Trebek said no, and then Watson rang in and responded, “What is 1920s.” (Trebek came back with, “No, Ken said that.”)"

This doesn't speak at all to Watson's NLP power or lack thereof at all, since it had no access to Ken's answer at all. Your categorization of that error alongside the Steve Wynn and Stephen A. Wynn thing is inappropriate; it was not a case of Watson considering "the 1920s" and "the twenties" as distinct.

ARTICLE: "In short, Watson is not anywhere close to possessing true understanding of its knowledge — neither conscious understanding of the sort humans experience, nor unconscious, rule-based syntactic and semantic understanding sufficient to imitate the conscious variety. "

Now this is definitely true. That said, no OTHER system is either, so you can't use that point to argue "Watson doesn't advance the state-of-the art."

In short, I think this analysis puts forth a shaky supposition from the start and then does a poor job at arguing it. I'm not qualified to judge how novel what IBM did was, nor how Jeopardy specific it is. (I suspect it's more Jeopardy-specific than IBM'd care to admit.) But nor am I convinced that this article does a good job.

One final comment:

ARTICLE: "In the meantime, of course, there were some singularly human characteristics on display in the Jeopardy! tournament, and evident only in the human participants. Particularly notable was the affability, charm, and grace of Ken Jennings and Brad Rutter. But the best part was the touches of genuine, often self-deprecating humor by the two contestants as they tried their best against the computer."

I also liked the time that Ken seemed to play off of Watson's wrong answer — when the clue was asking for a style of art, and Watson said "Picasso", Ken jumped right in and said "cubism".

There certainly has been an overload of attempts to cast Watson in a Kurzweilian light and it does not at all fit (I would blame Kurzweil more than Watson or the media covering it). I think significance is getting missed because of this. It isn't particularly fair to compare with search engines as they are quite sophisticated themselves and they don't exactly fit in the same sized cabinet as Watson does. It is important to note that Watson is not searching the Internet, but is using resources chosen ahead of time. This makes Watson more applicable to certain real world problems. It is precisely the task of extracting the important keywords from the clue and extracting the important part of the result that distinguishes Watson. I would not call this "a little NLP". Just as with Machine Vision it is the correct selection of data to throw away that distinguishes the successes from the failures. But most importantly the IBM team was able to measure their starting point with their first implementation (which probably was similar to the results you would get by scraping search engine results) and steadily improve the system.

This brings me to the point I want to make. What IBM has demonstrated is not that they have made this thing called Watson that is going to solve our problems, but that they have a team of Computer Scientists that can work with an information domain and fine tune and refine a system to the point that it operates at expert level. The "unbeatable Jeopardy! champion" that you envision is exactly what IBM is looking to provide, a system that augments a person already knowledgeable in the domain.

Mr. Yates,

In response to your comment:

It isn't particularly fair to compare with search engines as they are quite sophisticated themselves and they don't exactly fit in the same sized cabinet as Watson does. It is important to note that Watson is not searching the Internet, but is using resources chosen ahead of time.

The comparison to search engines is entirely fair. No one disputes that search engines are sophisticated and are quite an accomplishment in and of themselves; the question is whether Watson is an innovation. Watson is clearly built upon what's effectively a search engine. It's true that it didn't have a connection to the Internet while playing the game, but that's not a significant technological accomplishment, because Watson effectively had a subset of the Internet cached in its memory — a subset, as you note, that was carefully chosen by humans. Nor is the size of the machine a significant accomplishment — the "cabinet" in which Watson fit was actually a large room hidden backstage, a pretty standard-size server room.

This brings me to the point I want to make. What IBM has demonstrated is not that they have made this thing called Watson that is going to solve our problems, but that they have a team of Computer Scientists that can work with an information domain and fine tune and refine a system to the point that it operates at expert level.

This isn't new, either. Teams of computer scientists creating and refining computer systems to solve a specific problem is what computer scientists have been doing for as long as the profession has existed. (Which isn't to say that's all they've been doing.) And creating AI-based programs that are able to perform feats similar to human experts in very specific and complicated technical domains — that's been done since the 1980s.

I'm not at all denying that Watson is quite an accomplishment. It was indeed very impressive to watch. My point is that it's essentially applying existing AI methods to a new domain in which the power of those methods can really be expressed, and can become very publicly apparent. In other words, the biggest accomplishment here is having thought to put a computer on Jeopardy! in the first place.

But as far as I can tell, Watson did not exhibit a giant advance in natural language-processing abilities — which its creators emphasize is supposed to be its big technical feat. Watson can disambiguate word usage — as Ken Jennings wrote, "When it sees the word 'Blondie,' it's very good at figuring out whether Jeopardy! means the cookie, the comic strip, or the new-wave band" — and it has a few other similar capabilities; but those are a very small piece of the puzzle, and don't come anywhere close to truly understanding natural language. Again, I recommend Stephen Wolfram's post outlining the differences.

Mr. Driscoll,

Thanks for your two comments. Your objection to my post seems to be based on a broad-level assertion about the difficulty of designing something like Watson, and a variety of very specific objections to arguments I made. So I'll attempt to respond point-by-point to a few of your more substantial objections.

One note upfront: you've disclaimed that you're not very familiar with AI. While I don't want to argue from authority, I am actually quite familiar with AI — I've studied it academically and independently, and programmed it professionally — and my impression, for the reasons I've outlined, is that there is still an enormous gap in Watson's ability to understand natural language, and that Watson mostly uses existing AI and natural language processing (NLP) capabilities in a novel way. This is not to discount the impressiveness of those techniques, and I think Watson is the finest display of them we've seen so far. I think if you were familiar with pre-Watson AI and NLP techniques, your impression would be similar. While I'm not an AI researcher, there are at least two respected AI researchers who have offered very similar assessments to mine. One is Ben Goertzel. Another, as I mentioned, is Stephen Wolfram, whose post offers the useful distinction between linguistic matching and linguistic understanding. As Wolfram notes, Watson does not attempt to form a definite internal representation of whole sentences; what it does is use some recognition of parts of speech and attributes of categories to adjust statistical matching techniques — and this means that it is an advancement on, but not different in kind from, techniques that have been around for decades.

(continued in the next comment…)

(…continued from the previous comment)

Now to a few specific points:

…if the category is "U.S. Cities", that does NOT mean that the answer (question) is actually a US City, just that the clue is about a US City in some manner…. And so while Watson does pay attention to the category (throughout the game), it's not used as a "type" of the answer.

There are some Jeopardy categories in which you can't tell until you see the clue whether the category is a sort of hint, or whether it's actually telling you the type of the answer. But there are plenty of categories that restrict the type of response, and I think "U.S. Cities" for a Final Jeopardy clue is clearly one of them. It's doubtful, also, that Watson would or could have the ability to distinguish based on the context of the clue itself whether the clue is meant to determine the type of the response. Perhaps it's true that Watson would have gotten the question right if it had been reformulated as you describe, but it's still clear that Watson has severe shortcomings in its ability to understand natural language, and that most of its work happens by statistical association rather than by employing internal representations of predicates and whole sentences.

[Watson repeating Ken's wrong answer] doesn't speak at all to Watson's NLP power or lack thereof at all, since it had no access to Ken's answer at all.

True, but I just categorized this as "errors in worldly logic." It doesn't seem insignificant that Watson has no idea what's going on in the game aside from being fed the textual questions and the state of the question and score boards. If they had fed Watson the other players' answers, it would have been trivial for the programmers to add a simple "if (previous player answered this) then (eliminate this as a possible answer)" command. Even so, that would speak to the program's nature as a fine-tuned, rule-based statistical system rather than an instance of general intelligence.
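For what it's worth, that rule might look something like the following Python sketch. Everything here is hypothetical: the function names, the normalization steps, and the example confidences are made up for illustration, and nothing about it reflects Watson's actual code or inputs.

```python
import re


def normalize(response):
    """Lowercase and strip the question framing, punctuation, and a leading article."""
    text = response.lower().strip()
    text = re.sub(r"^(what|who)\s+(is|are|was|were)\s+", "", text)
    text = re.sub(r"[^\w\s]", "", text)
    text = re.sub(r"^(the|a|an)\s+", "", text)
    return " ".join(text.split())


def eliminate_previous(candidates, previous_responses):
    """Drop any candidate that matches a response another player already gave."""
    ruled_out = {normalize(r) for r in previous_responses}
    return [(resp, conf) for resp, conf in candidates
            if normalize(resp) not in ruled_out]


# Made-up confidences, purely for illustration.
candidates = [("1920s", 0.57), ("1910s", 0.30)]

# Filtered when the earlier response is spelled the same way...
print(eliminate_previous(candidates, ["What are the 1920s?"]))
# ...but not when it is phrased differently, as Ken's was.
print(eliminate_previous(candidates, ["What are the twenties"]))
```

Note that a plain string comparison like this would not have caught the actual case, since "the twenties" and "1920s" only match if the program already knows they name the same decade. Even the simple rule ends up leaning on some representation of meaning.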

"In short, Watson is not anywhere close to possessing true understanding of its knowledge…"

Now this is definitely true. That said, no OTHER system is either, so you can't use that point to argue "Watson doesn't advance the state-of-the art."

That was a conclusion from, not the basis of, my argument that Watson doesn't advance the state-of-the-art (nor was I saying it entirely doesn't). And I just wanted to specifically emphasize that Watson doesn't come close to achieving artificial general intelligence or true understanding of natural language, as many have been implying.

But if you gave me the Enterprise's computer and told me to write something that will beat Watson at Jeopardy, my response would be "okay, let me read NLP papers for a month" because I'd have little clue how to do it otherwise.

I'm not sure I entirely understand your point here, but to the extent I do, it seems you've got it just backwards. The Enterprise computer is the idealized realization of a non-conscious machine with complete natural language processing capability. This allows people to communicate with the computer using natural language, both to control the ship, and to ask questions of a machine with access to basically all available knowledge. It's unclear whether it has much intelligence, so I don't know how much luck you'd have using the computer's own programming to make a chess champion (though it could probably tell you how). But the Enterprise computer is already the perfect, generalized Jeopardy-playing machine. You could take a look at its programming to make a Watson — or you could trivially create a Watson by writing the one-line command: "Send the following query to the Enterprise computer: 'Computer, to what question is this sentence the answer: [Jeopardy clue]?'"

Watson's ability to understand natural language, and that Watson mostly uses existing AI and natural language processing (NLP) capabilities in a novel way. This is not to discount the impressiveness of those techniques, and I think Watson is the finest display of them we've seen so far.

That's fair enough. And I don't dispute some of the things you say later about things like "Watson doesn't have a true understanding of language". I probably counted your opinion of Watson as more negative than it actually is.

"there are plenty of categories that restrict the type of response, and I think "U.S. Cities" for a Final Jeopardy clue is clearly one of them"

So I looked through a ton of games here to get an idea of how often a category like that would be used with answers that aren't actually what the category says. And while they almost always are, it isn't a sure thing. There was one game with a "U.S. Cities" category in Double Jeopardy where the question for the $2000 clue was "what is Maine?" There were other things, too: a "National Historic Sites" category with questions asking for "Minuteman" missiles and the "Brown v. Topeka Board of Education" Supreme Court case, a "National Parks" category where one question asked what mountain range Yosemite is in, and even an entire "National Parks of the World" category where each clue was just the name of the park and the question was the name of the country it was in. (Alex did provide an explanation for that, so it only sort of counts.)

I'm not sure I entirely understand your point here, but to the extent I do, it seems you've got it just backwards. The Enterprise computer is the idealized realization of a non-conscious machine with complete natural language processing capability.

Sorry, I wasn't very clear. I originally wrote this response for a reply to a friend who shared the article over RSS on Google Reader, and decided I might as well post it here too. I made some minor changes, and a key bit got lost in this part: what I originally wrote was "if you gave me the computer from the USS Enterprise, wiped it of its software, then showed me how to program it…".

So what I meant by "the computer from the USS Enterprise" was basically just "give me lots of cycles". (I'm sort of assuming the NLP part of that computer system is "software only".)

So phrased a different way, what I was trying to argue was that I think most undergrad CS graduates could, given a sufficiently fast computer, reasonably easily write an unbeatable chess AI. (Taken to the extreme, if the computer is infinitely fast, you don't even need to do anything remotely intelligent at all: just expand out the whole minimax tree. If there's a library with board representation, "get the available moves" function, etc. available, this would take what, a few minutes?)
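To show how little "intelligence" that takes, here's a rough Python sketch of expanding the whole minimax tree. Since a full chess tree is far too large for any real machine, I'm assuming a toy stand-in game instead: a pile of stones, each player takes 1 to 3, and whoever takes the last stone wins. The function names and the game are mine, just for illustration; for chess you'd swap in a library's move generator and a win/loss/draw check.

```python
def minimax(state, maximizing, get_moves, apply_move, score):
    """Exhaustively expand the game tree below `state`.

    Returns the game value from the maximizing player's point of view.
    No pruning, no heuristics: every line of play is searched to the end.
    """
    moves = get_moves(state)
    if not moves:  # terminal position
        return score(state, maximizing)
    values = [
        minimax(apply_move(state, m), not maximizing, get_moves, apply_move, score)
        for m in moves
    ]
    return max(values) if maximizing else min(values)


# Toy game (an assumption for this sketch): state is the number of stones left.
def get_moves(stones):
    return [k for k in (1, 2, 3) if k <= stones]


def apply_move(stones, k):
    return stones - k


def score(stones, maximizing):
    # No stones left: whoever is to move has lost, because the other
    # player just took the last stone.
    return -1 if maximizing else 1


def best_move(stones):
    """Pick the move with the best exhaustive-search value for the player to move."""
    return max(
        get_moves(stones),
        key=lambda m: minimax(apply_move(stones, m), False,
                              get_moves, apply_move, score),
    )


print(minimax(5, True, get_moves, apply_move, score), best_move(5))
```

The search itself is a dozen lines; everything that makes real chess programs hard is about not having an infinitely fast computer.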

By contrast, it seems to the somewhat lay observer that even some "generic NLP researcher" (who hasn't put specific thought into solving Jeopardy) would still have a very difficult time beating Watson in a "somewhat-timed" contest, even given as much computing power as they want. (By "somewhat-timed" I mean it has an almost-guaranteed time period of a few seconds to think.) It'd become even more difficult if Watson's software was allowed to run on the same machine.

(I've got a few more comments I'll post in a moment; I suspect I'm near the character limit.)

The other thing to keep in mind is that it's almost never the case that someone invents some brand new technique out of the blue. Even for "groundbreaking projects", if you go and look at the papers that preceded them (whether they be by the same or different groups), they're almost always most of the way there. This is why we can have even seemingly-complex, revolutionary things like calculus be independently invented by two people at the same time.

And so you say that Watson "is an advancement on, but not different in kind from, techniques that have been around for decades"… but this is true of essentially everything out there. I'd give $50 to someone who could demonstrate that Wolfram Alpha is fundamentally different from things that have been around for a couple decades. (Of course the catch there is that it's sort of my opinion of what counts as "fundamentally different.") Wolfram is not the first to try a less statistical approach to NLP, of course.

The big question in my mind is not "how big of an advance is Watson", because in the narrow space of Jeopardy, I think it's pretty clearly an enormous advance. The big question is "how generally applicable is the technology underlying Watson?"

If the evidence-weighing and scoring functions they have turn out to be general, then it seems to me all this "they're taking existing techniques and applying them in a different setting" argument, no matter how true, is basically meaningless. What I fear is that this won't be the case.

Methinks the author doth protest too much.

Let's bottom line this. This article takes every opportunity to point out weaknesses and failures in Watson from the perspective of what the author believes Watson should have been doing to be proven successful and does not consider the actual stated goal from IBM that was being tested.

Per IBM, "The goal is to build a computer that can be more effective in understanding and interacting in natural language, but not necessarily the same way humans do it."

The reason this is significant, especially compared to Deep Blue, is that a computer is inherently good at processing the 1s and 0s required to analyze a chess game and the finite legal moves available. Those moves are extremely finite when compared to the diversity of human language and, I might add, especially American English, which is a conglomerate of many languages and dialects, not to mention slang and syntax.

But let's also take a look at the article's claims about Deep Blue. Deep Blue DID NOT win its first match against Kasparov; it simply won a single game and tied twice, thus losing by a score of 4 to 2. It was then re-designed, re-built and officially renamed Deeper Blue. In the subsequent match Deep(er) Blue beat Kasparov in what was a very close match, scoring 3 1/2 to 2 1/2. Not exactly the decisive victory of Watson.

The point is clear: Watson is very significant because it attempts, and has demonstrated significant success at, doing what computers are NOT good at, which is understanding human language. Was the success limited? Of course it was. Just as was Deep Blue's, which the author gives as a valid example of a significant computer breakthrough. So again, why is Watson's achievement not significant?

Further, the author says search engines do a pretty good job of what Watson was designed to do. Well, if the goal was to find the right answer this argument would have some validity, but since the goal was to process language, that is, to take information, research that information, then provide a reasonably valid answer, this is far off the mark. A search engine sorts and prioritizes the research. At no point does it take the research and summarize the data in an attempt to provide an answer. Google points you in the right direction, and we are required to evaluate the results and look for the answer we need.

No, the author got this backwards. Deep Blue and Deeper Blue were an affirmation of what we already knew computers did well and perhaps proof that programmers were getting better at taking advantage of that ability. Watson is the first significant step towards a computer that truly processes human language. It was far from perfect, but if you watch enough Jeopardy you'll see humans making similar errors. And this was IBM's first demonstration. I can't wait for Watson 2.0!

Methinks the commenter doth protest too much! Unfortunately, Zyradul, your comment seems based on a lack of knowledge about the history and methods of artificial intelligence.

First, to the pedantic question about whether Deep Blue or Deeper Blue beat Garry Kasparov: of course Deep Blue, like every piece of software, had many iterations to improve upon the failings of the initial version. But "Deeper Blue" was just a joke nickname IBM came up with for the second version that played Kasparov, while "Deep Blue" was the official name of every version of the program, including the one that beat Kasparov.

It's also not very useful to compare the point spreads of a chess match with those of a Jeopardy tournament, since the games and scoring systems are so different. The chess scores you cite are based on counting the winners of individual games; the closest comparison would be to Jeopardy's individual rounds, of which there were also six in the Watson tournament: two Jeopardy Rounds, two Double Jeopardy Rounds, and two Final Jeopardy Rounds. Watson had its fair share of difficulties: counting based on money won during each round, Watson won three, tied one (the first Jeopardy Round), and lost two (the second Jeopardy Round and the first Final Jeopardy Round). Nor was this quite, as you say, IBM's first demonstration of Watson; it was just the first to appear as an official match on Jeopardy! In fact, earlier iterations of Watson competed against many former Jeopardy contestants to help IBM improve the system.

The rest of this comment is basically addressed in my original post and subsequent comments. The basic argument seems to be that "Watson is the first significant step towards a computer that truly processes human language." Watson is nowhere near the first step towards being able to do some processing of natural language: researchers have been making attempts, and significant advances, for 50 years. (Just take a look at Google Translate for evidence of the results.) Nor does it seem to innovate any qualitatively new methods; it just improves on existing ones. Chapter 22 of Russell & Norvig, the standard textbook on AI, offers the basic blueprint for how to create a Watson.

As for a computer that actually understands natural language, equivalent to human capability: no computer comes close today, and neither does Watson, which was the main point my post was meant to illustrate. Artificial intelligence researchers — at least, those not directly involved in this project or with some other incentive for exaggerating its results — seem to agree that this is an improvement upon existing methods, and a novel show of what those methods can achieve, but nothing close to an architecture for general understanding of natural language.