[Continuing coverage of the 2009 Singularity Summit.]

Next up, David Chalmers. He’s a rock-star philosopher, like Ian Malcolm. The title of his talk is “Simulation and the Singularity.” (Abstract and bio here.)

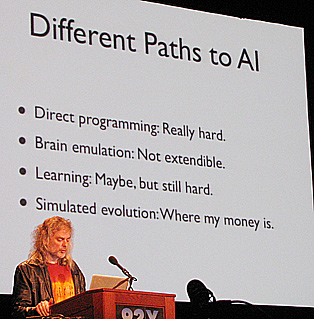

Chalmers isn’t talking about the philosophical requirements of simulation. He’s breaking down the assumptions behind the exponential-growth claim of Singularity-pushers: the idea that once we give our computers a certain level of intelligence (something past human-level), the pace will take off as the intelligence improves upon itself. This requires, he says, an extendible method, which neither biological reproduction nor brain emulation is.

The only way the Singularity will happen, he says, is through self-amplifying intelligence. The general requirement is just that an intelligence be able to create an intelligence smarter than itself. The original intelligence itself need not be very smart. Direct human methods won’t do it. The most plausible way, he says, is simulated evolution. If we arrive at above-human intelligence, he says, it seems likely it will take place in a simulated world, not in a robot or in our own physical environment.

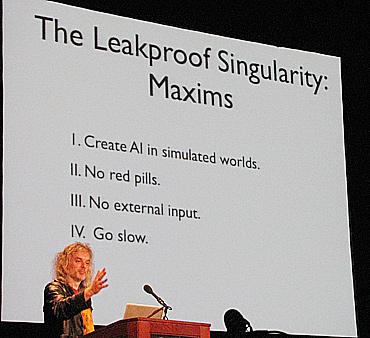

Now he’s getting to practical and philosophical implications if this were to happen, asking “how can we negotiate the Singularity, to maximize the chances of a valuable post-Singularity world.” He says that one possibility would be to put constraints on the intelligences we create, but that that would be impractical because they’re so difficult to predict. The other option, he says, is ongoing control, whereby we would monitor new intelligences as they come along, and would terminate undesirable intelligences. This is another point, he says, in favor of simulated intelligence.

(One critical objection: Is human-level intelligence in computers really possible? Let’s put aside the questions about replicating and simulating the human brain and just discuss intelligence in the abstract. There is still a basic requirement, in order to simulate something approaching human-level intelligence, of discerning its computable function. If we can’t figure it out from the brain or from our own direct engineering — as Chalmers confirms has been so difficult — how else are we going to do it? None of the A.I. programs we have created so far have been smart enough to create something smarter than themselves.)

Chalmers is concluding now with moral/ethical questions. How do we integrate into the post-Singularity world? Separatism, inferiority, extinction, or integration are our alternatives, he says. He prefers the last: we upload and self-enhance. That option, he says, raises some big questions, like will an upload system be conscious? And will it be me? (This, he says, is where it gets interesting for him, as a philosopher of mind.)

We don’t have a clue how a computational system could be conscious, he says, but we also don’t have a clue how brains could be conscious. But he emphasizes that he doesn’t think there is a fundamental difference between hardware and wetware.

In a gradual uploading scenario, he says, what’s most likely is that consciousness will stay equivalent rather than fade out. Chalmers says that once people see that you are preserved after being uploaded, and once all your friends are doing it, you’ll have to do it too. Goodie.

Chalmers concludes that super-A.I.s will be able to reconstruct us from the records we leave behind: things we’ve written, recordings of us, and so on. So if the Singularity happens after our lifetimes, what’s the best way for us to be reconstructed? By giving talks at Singularity conferences, of course!

[UPDATE: Ray Kurzweil, in the talk he gives to end the first day of the conference, remarks on some of Chalmers’s comments.]

Futurisms

October 3, 2009

Very interesting that they're trying to find a way to be 'reconstructed' if the Singularity occurs after they die. Perhaps they ought to consult some Christian philosophers who have wrestled mightily with the problem of identity in the context of the Resurrection of the dead, such as Peter van Inwagen. For the problem, of course, is that if the super-AIs (be they God or the Architect of the Matrix) can reconstruct *one* of you and it's supposed to be you, what happens if they reconstruct *two*, or twenty, of 'you'? There would be no criteria by which to say that one instead of the rest is actually you.

Then again, unlike Christians, perhaps Singularitarians would be quite happy with the prospect of potentially infinitely many copies of their present self running around in the distant future...

The difficulties present in the various paths to AI are one of the larger reasons why I believe augmenting our brains is much more likely to happen first. Every time we've tried to study a system to try and learn more about building AIs (for example, chess) we've ended up learning much more about the system, and how to create a specialized decision process for that system – but still nothing about AIs in general. At some point we will understand ourselves enough to be able to construct a consciousness, but that time is a long way off.

@Brian Boyd: This is a good point, and something Chalmers did touch on in his talk. I also heard a lot of other people discussing this one, and talked about it at some length with another conferencegoer (the British cabin builder I mentioned in a previous post). My thought is basically the same as what you say: there's no sensible way to discern copies from originals if you make a molecularly identical copy of someone. This is one of those points where you have to look at the premises of the question and, instead of asking how we are going to deal with this problem, say that the ethical intractability of this problem is just one more reason we shouldn't allow this scenario to occur in the first place.

@taoist: I quite agree with you that brain augmentation is much more feasible than strong AI. It still suffers from some of the same general problems, though, in that it requires us to understand the brain as a computer system when it very well might not be one.