[Continuing coverage of the 2009 Singularity Summit in New York City.]

Eliezer Yudkowsky, a founder of the Singularity Institute (organizer of this conference), is up next with his talk, “Cognitive Biases and Giant Risks.” (Abstract and bio.)

He starts off by talking about how stupid people are. Or, more specifically, how irrational they are. Yudkowsky runs through lots of common logical fallacies. He highlights the “Conjunction Fallacy,” where people find a story more plausible when it includes more details, when in fact a story becomes less probable with every detail added. I find this to be a ridiculous example. Plausible does not mean probable; people are just more willing to believe something happened when they are told that there are reasons that it happened, because they understand that effects have causes. That’s very rational. (The Wikipedia explanation, linked above, offers a different account from Yudkowsky’s, one that makes a lot more sense.)

Yudkowsky is running through more and more of these examples. (Putting aside the content of his talk for a moment, he comes across as unnecessarily condescending. Something I’ve seen a bit of here — the “yeah, take that!” attitude — but he’s got it much more than anyone else.)

He’s bringing it back now to risk analysis. People are bad at analyzing what is really a risk, particularly for things that are more long-term or not as immediately frightening, like stomach cancer versus homicide; people think the latter is a much bigger killer than it is.

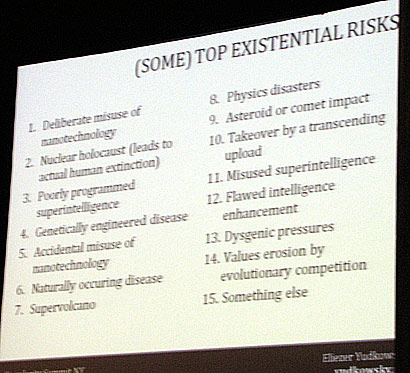

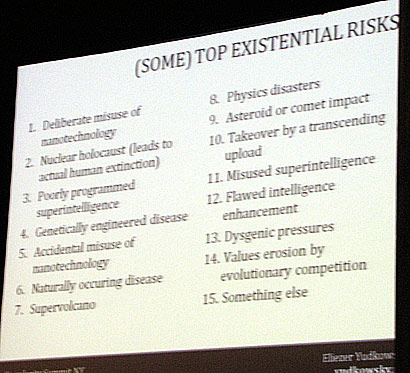

This is particularly important with the risk of extinction, because it’s subject to all sorts of cognitive biases: the conjunction fallacy; scope insensitivity (it’s hard for us to fathom scale); availability (no one remembers an extinction event); imaginability (it’s hard for us to imagine future technology); and conformity (such as the bystander effect, where people are less likely to render help in a crowd).

[One of Yudkowsky’s slides.]

Yudkowsky closes by asking why we as a nation are spending millions on football when we’re spending so little on all sorts of existential threats. We are, he concludes, crazy.

That seems at first to be an important point: We don’t plan on a large scale nearly as well or as rationally as we might. But just off the top of my head, Yudkowsky’s approach raises three problems. First, we do not all agree on what the existential threats are, and there is no set of problems that everyone thinks we should spend money on. Settling such disagreements is what politics and persuasion are for; scientists and technocrats cannot answer these questions for us, since they inherently involve values that are beyond the reach of mere rationality. Second, Yudkowsky’s depiction of humans, and of human society, as irrational and stupid is far too simplistic. And third, what’s so wrong with spending money on football? If we spent all our money on forestalling existential threats, we would lose sight of life itself, and of what we live for.

Thus ends his talk. The moderator notes that video of all the talks will be available online after the conference; we’ll post links when they’re up.

Futurisms

October 4, 2009

"mere rationality"? I can tell you do not value reason as much as the average scientist (or technocrat). I wouldn't say the average human being is crazy, but I do agree with his idea that what currently counts as sane is set by a very low bar.

It was a 20-minute talk, so I guess some details got left out (this is consistent with his personality; I have read his website/blog for years, and he is very exacting).

I have also heard that Yudkowsky is too condescending. I've never met him, but now I believe it a little more.

I certainly value reason of the sort that scientists do–in fact, I am a scientist by training. But I also value reason of a different sort: the kind that is value-laden, that most scientists explicitly claim is irrational and yet use anyway, in an impoverished form, in their everyday discourse. The scattered attempts at reviving utilitarianism evident in this summit (e.g. Anna Salamon's talk) are but one example.

I certainly won't disagree that people make a lot of very poor and senseless decisions, particularly on a societal and global level. But the sort of hyper-rational project that Yudkowsky, Salamon, and the like want to institute was shown by philosophers decades ago to be impossible. And the continued pursuit of a project that is both incoherent and uninterested in engaging with human cultural and social values does far more harm than good.

I am not saying you advocate soccer (or shopping at Macy's, or line dancing…) over preparing for the unthinkable.

But what I am saying is that you perpetuate a thoughtless, heedless, somewhat oblivious innocence, so common among medieval serfs who thought that all woe was determined by g-d and there was nothing you could do about it.

There is a word for that, and it is immaturity. Another word would be cavalier, and I am sure 'apathetic' applies as well.

A good home-owner insures his abode. Look at the category of people that do not (or can not) insure against risks, and the argument they use. Now look at the category of people who do. In which category do your arguments belong?