[Continuing coverage of the 2009 Singularity Summit.]

Up next, all the way from Australia, is Marcus Hutter on “Foundations of Intelligent Agents.” (Abstract and bio, plus his homepage.) He says that his talk is not about enhancing natural systems but about designing first principles for building artificial intelligence from the ground up, at most inspired by nature. In other words, not much novel or unexpected is likely to follow in his talk. That said, the guy has an engaging stage presence (with a cool accent), and his dress and presentation are quite colorful, literally.

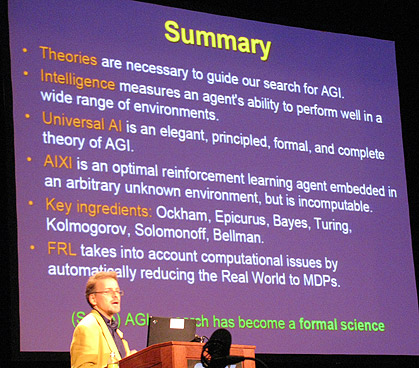

Yeah, he’s spent the first ten minutes going over Ockham’s razor, induction, and Turing machines. Now he’s starting to build on them, showing how the basic elements of decision theory can be put together, and he’s introducing an “extremely general framework” for an agent model.

A baby starts cooing in the audience, and Hutter pauses, and asks, “Is there a question?” Aww, very cute. Sort of weird to think of someone with a baby coming to the Singularity Summit, though. (Some sociological thoughts on that, perhaps, at a later date.)

Back to the matter at hand: Hutter says that the basic approach of his system, “Feature Reinforcement Learning,” is to reduce a real-world problem to a computational decision space. What he’s presenting is basically a formalization of the engineering process of A.I. He seems to be introducing a formalism for determining what qualities an advanced intelligence will have: Will it like drugs? Procreation? Suicide? Self-improvement? The answers can be determined within that “decision space” by optimizing the intelligent agent’s rewards. John Stuart Mill, eat your heart out.

He claims this system could be used to determine what the Singularity will look like: whether the intelligence will be like the Borg and want to assimilate, whether it will be benevolent, and so forth. Among other things, his formalism defines a space of possible intelligence programs and includes an optimal intelligence program known as AIXI. He says this means there is an upper bound on possible intelligence. If we could ever determine what AIXI is, we might learn what that upper bound is, and it is possible that it falls short of Singularity-level intelligence. I have some serious doubts about the limits that computable functions impose on what we could know through such a formalism (beyond the various absurdities of the formalism itself), but I won’t go into those for now.

He concludes by saying that AGI (artificial general intelligence) research, or at least some of it, has become a form of science. Interesting to hear someone implicitly acknowledge that what’s been done so far is very much not science, but engineering.

Futurisms

October 3, 2009